The latest Tesla software update includes changes to the Autopilot system aimed at keeping drivers paying attention.

Update 2023.44.30.1 includes a change called Autopilot Suspension. “For maximum safety and accountability, use of Autopilot features will be suspended if improper usage is detected,” the release notes read.

“Improper usage is when you, or another driver of your vehicle, receive five ‘Forced Autopilot Disengagements’.

“A disengagement is when the Autopilot system disengages for the remainder of a trip after the driver receives several audio and visual warnings for inattentiveness.

“Driver-initiated disengagements do not count as improper usage and are expected from the driver.”

If a Tesla detects that a driver is misusing the Autopilot system, the feature isn’t simply deactivated for that drive.

“Autopilot features can only be removed per this suspension method and they will be unavailable for approximately one week,” says Tesla in the release notes.

The company also reminds drivers not to use hand-held devices behind the wheel, and to keep their hands on the wheel and their eyes on the road ahead.

The changes relate to a recall issued by Tesla in the US following an investigation into Autopilot by the National Highway Traffic Safety Administration (NHTSA).

The recall, which is being remedied with this latest over-the-air software update, affects 2,031,220 vehicles in the US.

No recall has been issued in Australia. However, there’s no mention in the release notes that these Autopilot tweaks won’t apply to Australian-market Teslas.

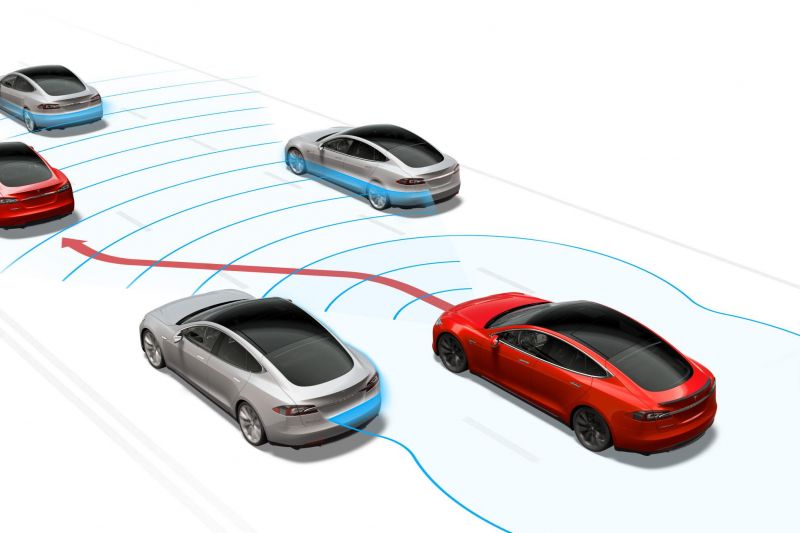

Tesla’s Autopilot is considered a Level 2 driver-assistance technology under the levels of autonomy defined by the Society of Automotive Engineers (SAE).

This means Tesla’s Autopilot system can steer, brake, and accelerate on its own, but still requires the driver to keep their hands on or near the steering wheel and remain alert to the current situation.

The driver should intervene if they believe the car is about to do something illegal or dangerous.

To determine whether the driver has proper control, Tesla measures the torque the driver applies to the vehicle’s steering wheel.

If the car detects that the driver has not been holding the wheel for an extended period, the system will prompt the driver to take over.

Despite this, the NHTSA has found that “the prominence and scope of the feature’s controls may not be sufficient to prevent driver misuse of the SAE Level 2 advanced driver-assistance feature”.

Autopilot has been the subject of an extensive, long-running investigation by the NHTSA. The agency has opened more than 36 investigations into Tesla crashes, 23 of them involving fatalities.

The recall comes shortly after a former Tesla employee claimed the US electric vehicle (EV) manufacturer’s self-driving technology is unsafe for use on public roads.

As reported by the BBC, Lukasz Krupski said he has concerns about how AI is being used to power Tesla’s Autopilot self-driving technology.

“I don’t think the hardware is ready and the software is ready,” said Mr Krupski.

“It affects all of us because we are essentially experiments in public roads. So even if you don’t have a Tesla, your children still walk in the footpath.”

Mr Krupski claims he found evidence in company data suggesting that requirements for the safe operation of vehicles with a certain level of semi-autonomous driving technology hadn’t been followed.

These developments also come as a US judge ruled there is “reasonable evidence” that Tesla CEO Elon Musk and other managers knew about dangerous defects in the company’s Autopilot system.

A Florida lawsuit was brought against Tesla after a fatal crash in 2019, in which the Autopilot system on a Model 3 failed to detect a truck crossing in front of the car.

Jeremy Banner was killed when his Model 3 crashed into an 18-wheeler truck that had turned onto the road ahead of him, shearing the roof off the Tesla.

Despite this, Tesla had two victories in Californian court cases earlier this year.

Micah Lee’s Model 3 was alleged to have suddenly veered off a highway in Los Angeles while travelling at 65mph (104km/h) with Autopilot active, striking a palm tree and bursting into flames, all within the span of a few seconds.

Additionally, Tesla won a lawsuit brought by Los Angeles resident Justine Hsu, who claimed her Model S swerved into a kerb with Autopilot active.

In both cases, Tesla was cleared of any wrongdoing.